This task can be performed using Poison Pill

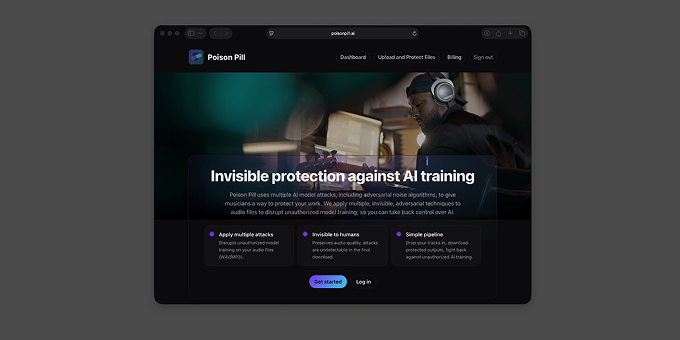

Invisible protection against AI training

Best product for this task

Poison Pill

music

AI companies are taking music without permission to train AI models that are sold as replacements for musicians. Poison Pill adds imperceptible noise to your music files. That noise disrupts how AI models interpret your music and degrades any model trained on it, unless the company pays you for the originals.

Audio, protect audio, music ai, adversarial noise, adversarial noise generator, adversarial noise attack, adversarial noise ai, adversarial noise audio, adversarial noise app, ai adversarial noise

What to expect from an ideal product

- Upload your tracks to Poison Pill before sharing them anywhere online. It adds protective noise that humans can't hear but that trips up AI training systems

- The hidden audio changes make your music useless for training AI models while keeping it perfectly playable for real listeners on streaming platforms

- AI companies training on your protected files will get corrupted results that damage their models, forcing them to either pay for clean versions or skip your music entirely

- You can protect entire albums or individual songs in minutes, then distribute the protected versions through normal channels like Spotify, SoundCloud, or your website

- The protection stays embedded in your files permanently, so even if someone downloads and reshares your music, the AI-blocking noise travels with it
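
To give a rough sense of what "noise that humans can't hear" means in the points above, here is a minimal Python sketch. It is not Poison Pill's method: real adversarial noise is optimized against a model, while this example only adds small random noise to show how a tight amplitude limit keeps the change far below the level of the music. The helper names (`add_bounded_noise`, `snr_db`), the `epsilon` limit, and the test tone are all assumptions made for illustration.

```python
import math
import random


def add_bounded_noise(samples, epsilon=0.001, seed=0):
    """Return a copy of `samples` with uniform noise in [-epsilon, epsilon] added.

    Hypothetical helper: plain random noise, NOT an adversarial perturbation.
    """
    rng = random.Random(seed)
    return [s + rng.uniform(-epsilon, epsilon) for s in samples]


def snr_db(clean, perturbed):
    """Signal-to-noise ratio in decibels between the clean and perturbed audio."""
    signal_power = sum(s * s for s in clean)
    noise_power = sum((p - s) ** 2 for s, p in zip(clean, perturbed))
    return 10 * math.log10(signal_power / noise_power)


# Assumed test signal: one second of a 440 Hz tone sampled at 8 kHz.
sample_rate = 8000
clean = [
    0.5 * math.sin(2 * math.pi * 440 * n / sample_rate)
    for n in range(sample_rate)
]

protected = add_bounded_noise(clean)
print(f"SNR: {snr_db(clean, protected):.1f} dB")
```

A higher SNR means the added noise is quieter relative to the music. An actual protection tool would choose the noise deliberately rather than at random, but it would work within a similar amplitude limit to stay inaudible.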