This task can be performed using Poison Pill.

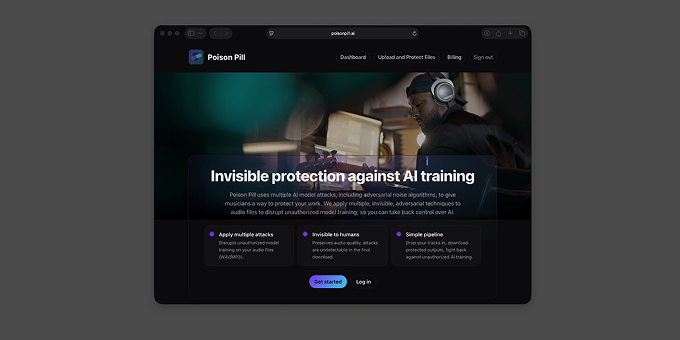

Invisible protection against AI training

Best product for this task

Poison Pill

music

AI companies are taking music without permission to train AI models that are sold as replacements for musicians. Poison Pill adds imperceptible noise to your music files. The noise disrupts how AI models interpret your music and degrades models trained on it, unless the companies pay you for the unaltered originals.

Audio, protect audio, music AI, adversarial noise, adversarial noise generator, adversarial noise attack, adversarial noise AI, adversarial noise audio, adversarial noise app, AI adversarial noise

What to expect from an ideal product

- Upload your music tracks to Poison Pill's platform to add protective noise that disrupts AI training while your songs sound unchanged to human listeners

- Set your licensing terms and prices directly on the platform so AI companies know exactly what they must pay to access clean, unprotected versions of your music

- Use the platform's monitoring dashboard to track which AI companies attempt to scrape or use your protected content without authorization

- Push AI companies to negotiate fair compensation, since models trained on your protected music files produce degraded results

- Create a verifiable record of ownership and licensing that you can rely on legally if AI companies claim fair use to justify using your music for free