This task can be performed using Poison Pill

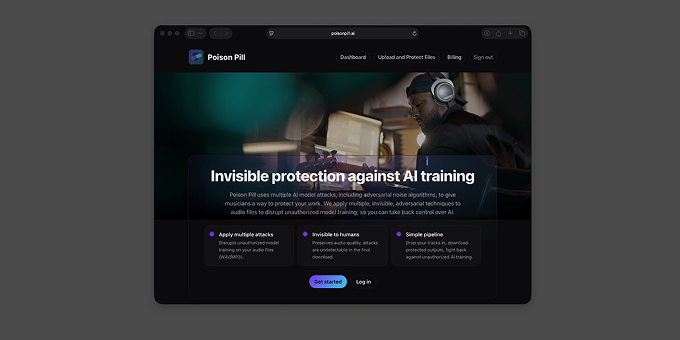

Invisible protection against AI training

Best product for this task

Poison Pill

music

AI companies are taking music without permission to train AI models that are sold as replacements for musicians. Poison Pill adds imperceptible noise to your music files, which messes with how AI models understand your music and makes those models worse unless the companies pay you for the originals.

Audio, protect audio, music ai, adversarial noise, adversarial noise generator, adversarial noise attack, adversarial noise ai, adversarial noise audio, adversarial noise app, ai adversarial noise

What to expect from an ideal product

- Poison Pill embeds hidden noise in your music tracks that is designed to be imperceptible to human listeners but to corrupt AI training processes

- The protection works by inserting carefully crafted noise patterns that cause AI models to learn incorrect associations and produce poor-quality output when trained on your music

- You can apply this imperceptible noise to any audio format before uploading to streaming platforms or sharing online, creating a shield against unauthorized AI harvesting

- The corrupted data forces AI companies to either accept degraded model performance or negotiate proper licensing deals with original creators for clean training material

- Unlike audible watermarks that affect the listening experience, this method preserves your music's sound quality while poisoning any attempt to steal it for machine learning datasets
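
To make the idea of "carefully crafted noise patterns" more concrete, here is a minimal sketch of a bounded adversarial perturbation. It is not Poison Pill's actual method, which this page does not describe. It assumes a toy linear model (`weights`) standing in for an AI system and uses a sign-of-gradient step (in the style of the fast gradient sign method) to push the model's score in the wrong direction while keeping every sample within a small `epsilon` of the original. The function names, the 440 Hz test tone, and the value of `epsilon` are all illustrative assumptions.

```python
import numpy as np


def adversarial_perturb(audio, weights, label, epsilon=0.002):
    """Add a sign-of-gradient perturbation bounded by epsilon per sample.

    Assumption: the "model" is a toy linear scorer, score = weights . audio,
    with loss = -label * score. A real system would use a trained network.
    """
    grad = -label * weights              # gradient of the loss w.r.t. the audio
    delta = epsilon * np.sign(grad)      # step that increases the loss
    return np.clip(audio + delta, -1.0, 1.0)


def snr_db(clean, perturbed):
    """Signal-to-noise ratio in dB between the clean and perturbed audio."""
    noise = perturbed - clean
    return 10 * np.log10(np.sum(clean ** 2) / np.sum(noise ** 2))


# Hypothetical stand-ins: one second of a 440 Hz tone and random model weights.
rng = np.random.default_rng(0)
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
audio = 0.5 * np.sin(2 * np.pi * 440 * t)
weights = rng.standard_normal(sample_rate)

# Treat the model's current decision on the clean audio as the "correct" label.
label = 1.0 if weights @ audio >= 0 else -1.0

protected = adversarial_perturb(audio, weights, label)

print("max change per sample:", np.max(np.abs(protected - audio)))
print("SNR (dB):", snr_db(audio, protected))
print("model margin before:", label * (weights @ audio))
print("model margin after: ", label * (weights @ protected))
```

The perturbation is small relative to the signal, yet it lowers the toy model's margin on the correct label. Whether such noise is truly inaudible, survives lossy encoding, or degrades a real training run depends on details this sketch does not model.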