ModelRed Reviews — Discover what people think of this product.

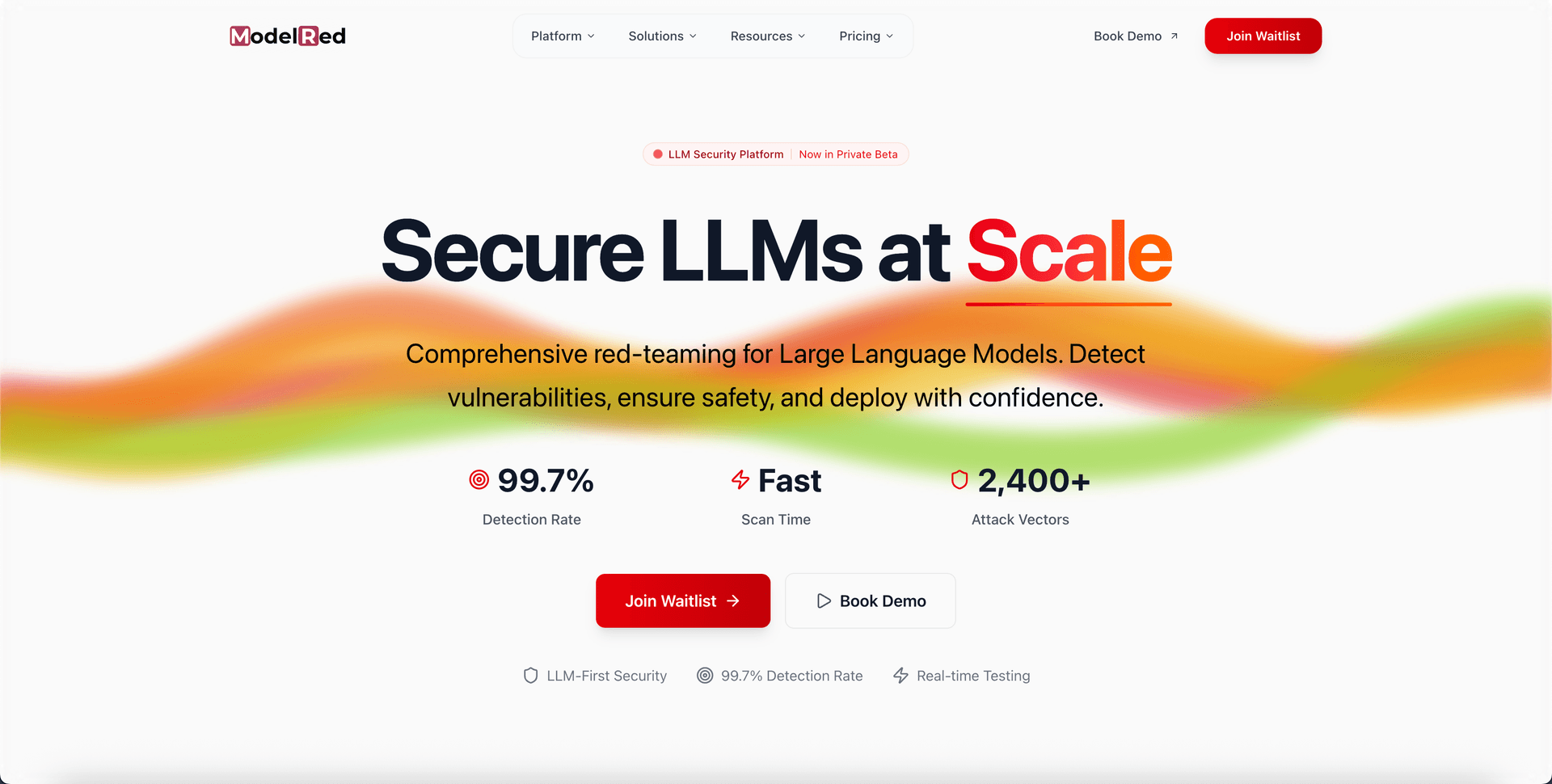

ModelRed

AI security testing for large language models

- supporters

What does ModelRed help with?

ModelRed is a security platform for organizations deploying large language models.

Companies are moving fast to integrate LLMs into production systems — from customer support to financial operations, healthcare, and internal tools. But security often takes a back seat. Attacks like prompt injections, jailbreaks, and data leaks are already common. Less obvious but equally dangerous are threats like model inversion (extracting sensitive training data), misuse of connected tools and APIs, exfiltration of hidden secrets, overconfident hallucinations in workflows, and poisoned datasets or malicious retrievals in RAG and fine-tuning pipelines. These risks can lead to data exposure, compliance failures, or costly business disruptions.

ModelRed helps organizations find and fix these vulnerabilities before attackers exploit them.

We’ve built 100+ automated probes that simulate adversarial behavior and stress-test models under real-world conditions. This goes beyond surface-level testing — our probes cover prompt injection variations, jailbreak chains, data extraction attempts, tool manipulation, safety bypasses, and more. Results are delivered in structured reports with actionable insights so teams can prioritize fixes.

Hyperfocal

Photography editing made easy.

Describe any style or idea

Turn it into a Lightroom preset

Awesome styles, in seconds.

Built by Jon·C·Phillips

Weekly Drops: Launches & Deals