This task can be performed using RayAI

RayAI: Scale AI agents, not your infrastructure headaches.

Best product for this task

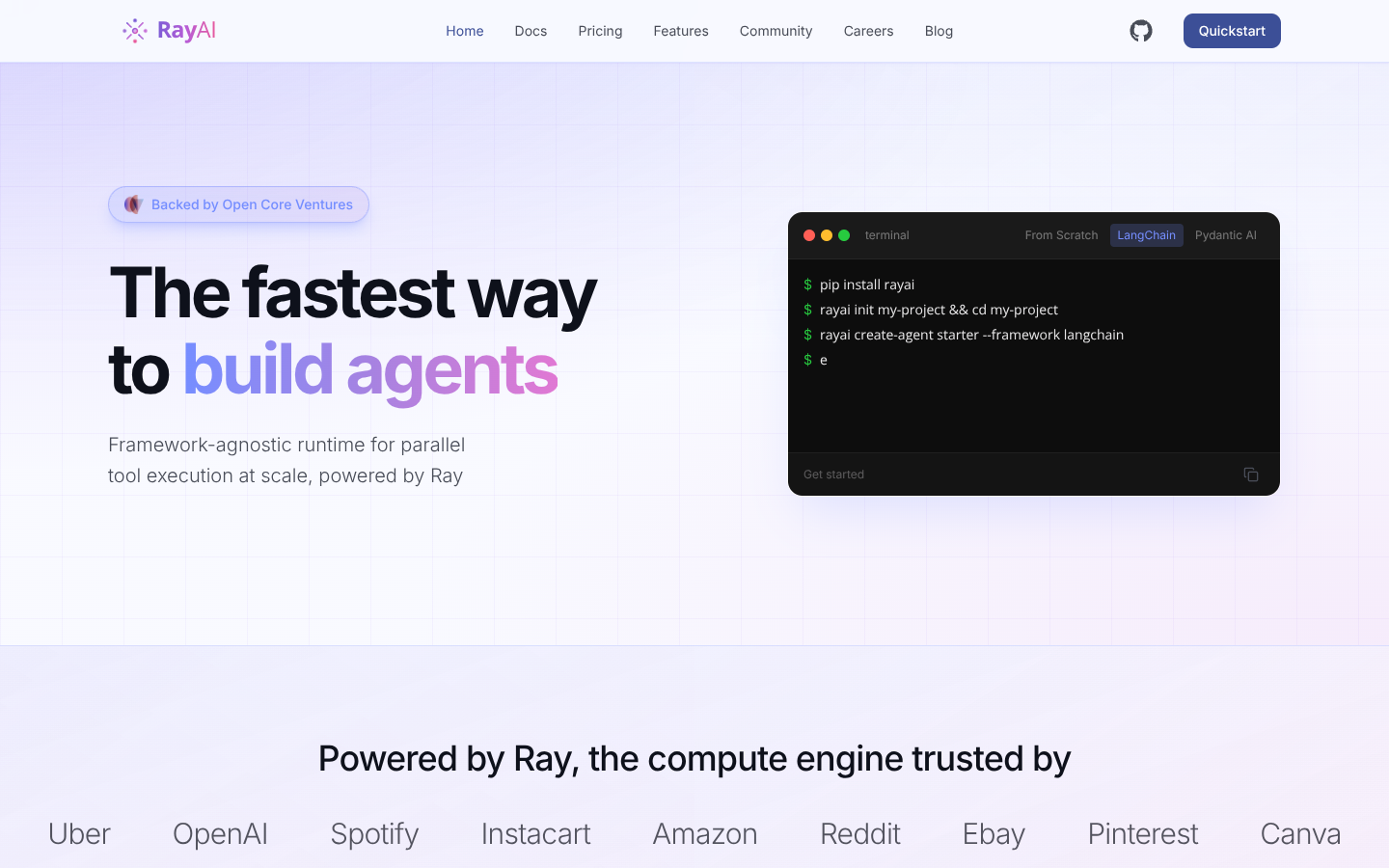

RayAI

ai

RayAI provides a Ray-powered runtime for scaling AI agents with distributed tool execution, sandboxed code, and fault-tolerant orchestration. It connects to popular agent frameworks while managing heterogeneous compute and multi-cloud deployment so teams can focus on agent logic instead of infrastructure.

What to expect from an ideal product

- RayAI automatically handles distributed deployment across multiple cloud providers so you don't need to write complex configuration files or manage server setups

- The platform connects directly to existing agent frameworks like LangChain and CrewAI, letting you scale without rewriting your agent code or learning new deployment tools

- Built-in fault tolerance keeps your agents running when individual nodes fail, reducing the need to build custom retry logic and monitoring systems

- Sandboxed execution environments isolate agent-generated code, and compute resources are provisioned to match your agent workloads, removing manual resource allocation tasks

- The Ray-powered runtime distributes tool execution across available infrastructure, so you can focus on agent behavior instead of deciding where each task runs