This task can be performed using Mem0 ai

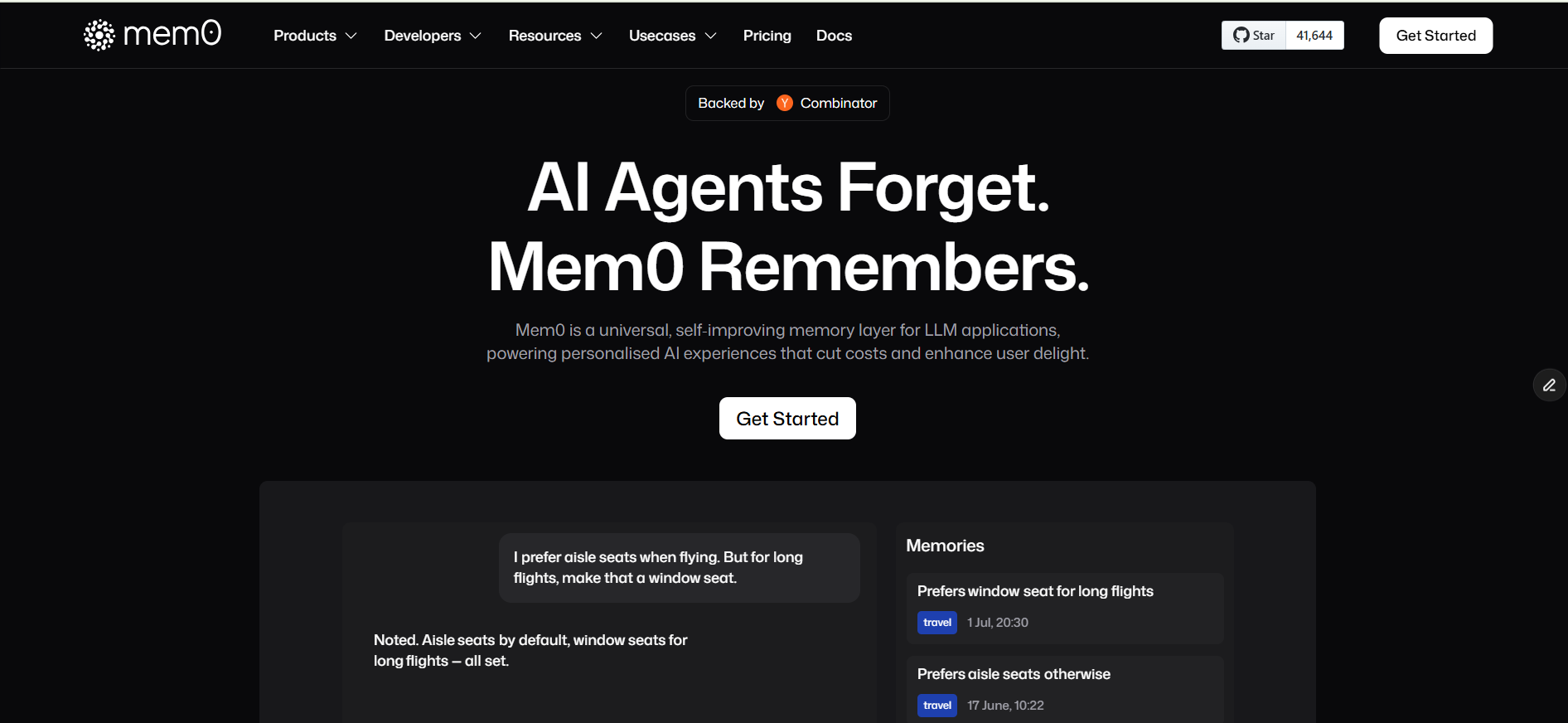

“AI Agents Forget. Mem0 Remembers.”

Best product for this task

Mem0 ai

dev-tools

Mem0 is a universal, self-improving memory layer for LLM-based applications. It stores and retrieves user interaction context to enable personalized AI experiences while reducing token usage and cost.

What to expect from an ideal product

- Stores conversation history outside the main chat, so you don't have to resend the same context with every request

- Saves tokens by automatically pulling in only the relevant past conversations when they are needed, instead of including everything in every API call

- Learns from user interactions to build a persistent memory that improves over time, without requiring constant context refreshing

- Keeps track of user preferences and past topics, so your app can pick up where it left off without expensive, token-heavy summaries

- Reduces the need to send long conversation threads by maintaining a separate memory layer that feeds context only when relevant
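
The idea behind these points can be illustrated with a minimal sketch of a separate memory layer. This is not Mem0's API: `SimpleMemoryStore`, `tokenize`, and `build_prompt` are hypothetical names made up for this example, and plain word overlap stands in for whatever relevance ranking a real product would use (typically embeddings). It only shows the pattern of storing facts per user and feeding a prompt just the ones that match the current question.

```python
import re


def tokenize(text):
    """Lowercased word set; a crude stand-in for embedding similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class SimpleMemoryStore:
    """Hypothetical in-process memory layer, keyed by user ID."""

    def __init__(self):
        self._memories = {}

    def add(self, user_id, text):
        """Store one remembered fact for a user."""
        self._memories.setdefault(user_id, []).append(text)

    def search(self, user_id, query, limit=2):
        """Return up to `limit` memories sharing at least one word with the query."""
        query_words = tokenize(query)
        scored = []
        for text in self._memories.get(user_id, []):
            overlap = len(query_words & tokenize(text))
            if overlap > 0:
                scored.append((overlap, text))
        # Highest overlap first; ties keep insertion order (sort is stable).
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:limit]]


def build_prompt(store, user_id, question, limit=2):
    """Assemble a prompt from only the relevant memories, not the full history."""
    relevant = store.search(user_id, question, limit=limit)
    context = "\n".join(f"- {memory}" for memory in relevant)
    return f"Known about the user:\n{context}\n\nQuestion: {question}"


store = SimpleMemoryStore()
store.add("alice", "Prefers vegetarian food")
store.add("alice", "Lives in Berlin and commutes by bike")
store.add("alice", "Is learning Rust for a systems project")
store.add("alice", "Allergic to peanuts")

question = "Suggest a vegetarian food spot in Berlin"
prompt = build_prompt(store, "alice", question)
print(prompt)
```

Here only two of the four stored facts reach the prompt, which is where the token savings come from. A word-overlap ranking misses relevant facts that share no vocabulary with the question (the peanut allergy, in this case), which is one reason real memory layers tend to rely on semantic search rather than keyword matching.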