This task can be performed using Nanobot

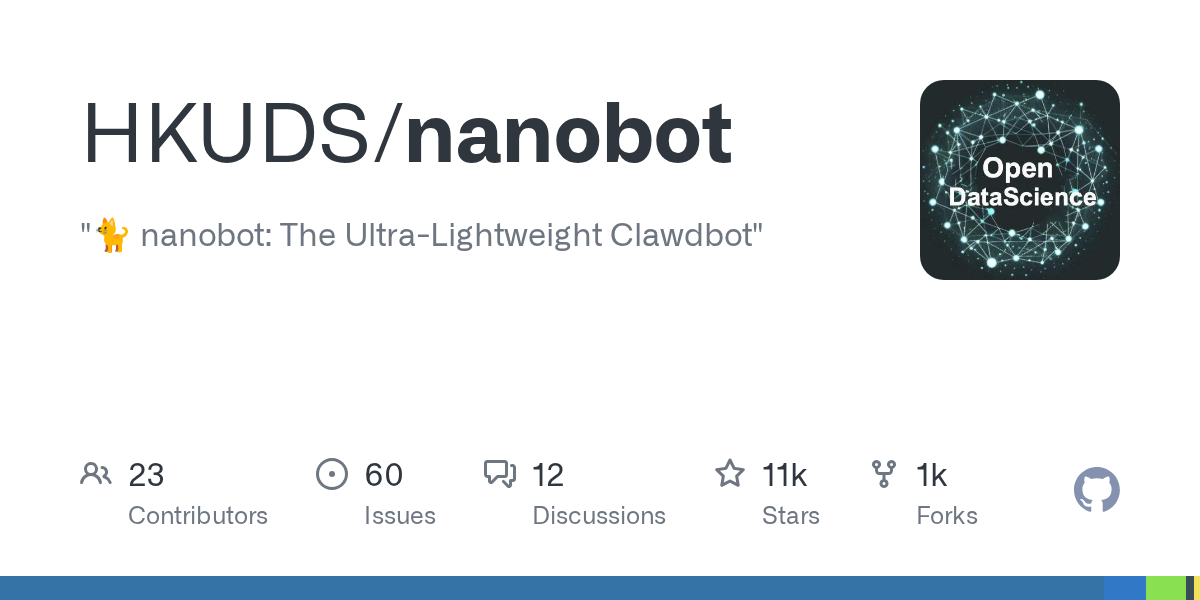

Nanobot: ultra-lightweight clawdbot for streamlined, minimal cat workflows

Best product for this task

Nanobot

Open source

Nanobot is an ultra-lightweight personal AI assistant framework that delivers full agent capabilities in a compact, readable codebase. It supports multiple LLM providers, local vLLM servers, and task-focused agents for research, automation, and daily workflows with minimal configuration and fast deployment.

What to expect from an ideal product

- Nanobot connects to multiple LLM providers through a single configuration file, letting you switch between OpenAI, Anthropic, and other services without changing your code

- The framework includes built-in support for local vLLM servers, so you can run your own models alongside cloud providers in the same workflow

- Task-focused agents handle different parts of your automation pipeline, and each agent can use a different LLM backend, chosen for what works best for that specific job

- Fast deployment means you can spin up new integrations in minutes rather than hours, with minimal setup required to add new LLM providers to your existing workflows

- The compact codebase makes it easy to customize how different LLM providers are called and managed, giving you full control over routing requests based on cost, speed, or capability needs
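
To make the single-configuration-file idea concrete, here is a minimal Python sketch of how per-agent provider selection and cost- or speed-based routing could look. It is not Nanobot's actual schema or API: the config layout, the `Provider` class, the `load_config` and `pick_provider` helpers, and the `cost_rank` and `speed_rank` values are all hypothetical and chosen only for illustration. The vLLM entry assumes a local server started with its default OpenAI-compatible endpoint on port 8000.

```python
import json
from dataclasses import dataclass

# Hypothetical config layout (NOT Nanobot's real schema).
# cost_rank / speed_rank are made-up illustrative values: lower is better.
CONFIG_TEXT = """
{
  "providers": {
    "openai":    {"base_url": "https://api.openai.com/v1", "cost_rank": 2, "speed_rank": 1},
    "anthropic": {"base_url": "https://api.anthropic.com", "cost_rank": 3, "speed_rank": 2},
    "vllm":      {"base_url": "http://localhost:8000/v1",  "cost_rank": 1, "speed_rank": 3}
  },
  "agents": {
    "research":   {"provider": "anthropic"},
    "automation": {"provider": "vllm"}
  }
}
"""


@dataclass(frozen=True)
class Provider:
    name: str
    base_url: str
    cost_rank: int   # lower = cheaper (illustrative only)
    speed_rank: int  # lower = faster (illustrative only)


def load_config(text):
    """Parse the config and return (providers by name, provider per agent)."""
    raw = json.loads(text)
    providers = {
        name: Provider(name=name, **fields)
        for name, fields in raw["providers"].items()
    }
    # Each agent is bound to exactly one provider named in the same file.
    agents = {
        agent: providers[spec["provider"]]
        for agent, spec in raw["agents"].items()
    }
    return providers, agents


def pick_provider(providers, prefer="cost"):
    """Return the provider with the best (lowest) rank for the given criterion."""
    if prefer not in ("cost", "speed"):
        raise ValueError(f"unknown routing criterion: {prefer!r}")
    rank = (lambda p: p.cost_rank) if prefer == "cost" else (lambda p: p.speed_rank)
    return min(providers.values(), key=rank)


providers, agents = load_config(CONFIG_TEXT)
print(agents["research"].name)                  # provider bound to the research agent
print(pick_provider(providers, "cost").name)    # cheapest provider by rank
print(pick_provider(providers, "speed").name)   # fastest provider by rank
```

In this sketch, switching an agent to another backend is a one-line change in the config text, and the calling code stays untouched. A real setup would replace the static ranks with whatever cost, latency, or capability signals matter for your workload.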