This task can be performed using Agenta

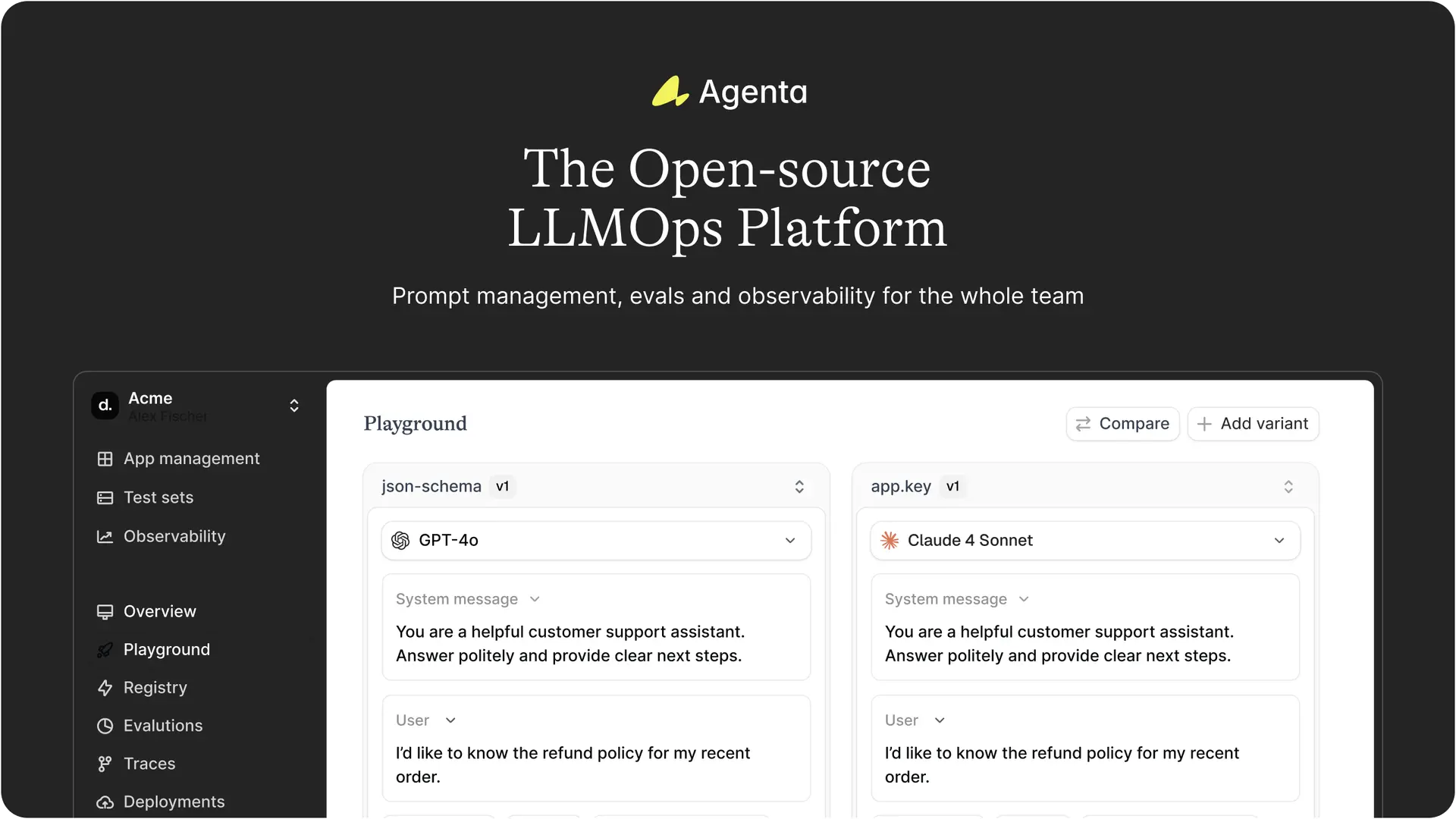

Agenta is an open-source LLMOps platform for building reliable AI apps. Manage prompts, run evaluations, and debug traces. We help developers and domain experts collaborate to ship LLM applications faster and with confidence.

Best product for this task

Agenta

dev-tools

Agenta is an open-source LLMOps platform that helps AI teams build and ship reliable LLM applications. Developers and subject matter experts work together to experiment with prompts, run evaluations, and debug production issues.

The platform addresses a common problem: LLMs are unpredictable, and most teams lack the right processes. Prompts get scattered across tools. Teams work in silos and deploy without validation. When things break, debugging feels like guesswork.

Agenta centralizes your LLM development workflow:

- Experiment: Compare prompts and models side by side. Track version history and debug with real production data.

- Evaluate: Replace guesswork with automated evaluations. Integrate LLM-as-a-judge, built-in evaluators, or your own code.

- Observe: Trace every request to find failure points. Turn any trace into a test with one click. Monitor production with live evaluations.

What to expect from an ideal product

- Run automated evaluations with LLM-as-a-judge or custom code to validate your app's responses before going live, instead of relying on manual testing

- Compare different prompts and models side by side to find the best-performing version, then track changes over time to catch performance drops

- Test your LLM app with real production data to catch edge cases and problems that synthetic test data might miss

- Set up systematic evaluation processes that replace random testing with repeatable performance measurements you can trust

- Turn production traces into test cases with one click, so you can reproduce and fix issues before they impact users
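
To make "custom code" evaluation and side-by-side comparison concrete, here is a minimal, generic sketch in plain Python. It does not use Agenta's SDK or API. Every name in it (`TestCase`, `exact_match`, `evaluate`, `variant_a`, `variant_b`) is hypothetical, and the two "variants" are simple string functions standing in for real prompt or model calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TestCase:
    """One repeatable test: an input and the output we expect for it."""
    app_input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Hypothetical custom evaluator: 1.0 on an exact match, else 0.0."""
    return 1.0 if output.strip() == expected.strip() else 0.0


def evaluate(
    app: Callable[[str], str],
    cases: list[TestCase],
    evaluator: Callable[[str, str], float] = exact_match,
) -> float:
    """Run every test case through the app and return the mean score."""
    if not cases:
        raise ValueError("at least one test case is required")
    scores = [evaluator(app(case.app_input), case.expected) for case in cases]
    return sum(scores) / len(scores)


# Stand-ins for two prompt/model variants (assumption: a real app
# would call an LLM here instead of transforming the string).
def variant_a(text: str) -> str:
    return text.upper()


def variant_b(text: str) -> str:
    return text.title()


cases = [
    TestCase("paris", "Paris"),
    TestCase("new york", "New York"),
]

# Score both variants on the same test set and pick the higher one.
results = {
    "variant_a": evaluate(variant_a, cases),
    "variant_b": evaluate(variant_b, cases),
}
best = max(results, key=results.get)
```

Because the test set and evaluator are fixed, rerunning the comparison after a prompt change gives a score that can be compared directly with the previous run. Swapping `exact_match` for a function that asks a model to grade the output would give an LLM-as-a-judge evaluator with the same interface.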